I recently watched Karpathy's "The spelled-out intro to neural networks and backpropagation: building micrograd" and came away with a deeper understanding of neural networks and backpropagation. The logic is surprisingly concise, as Karpathy's 200-line implementation of an autograd engine shows. To test my understanding, I built a neural network in Google Sheets.

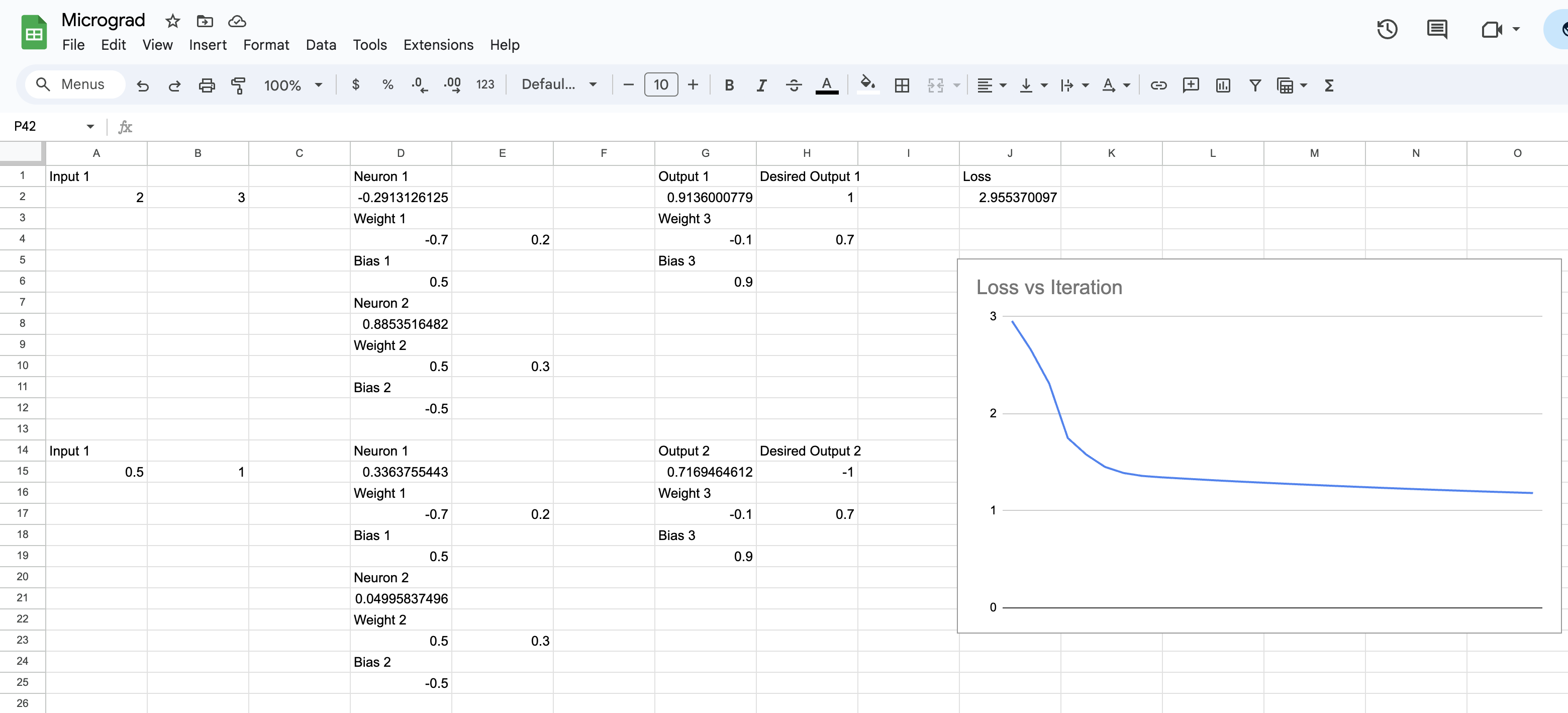

The network consists of 2 inputs, 2 neurons, and an output.

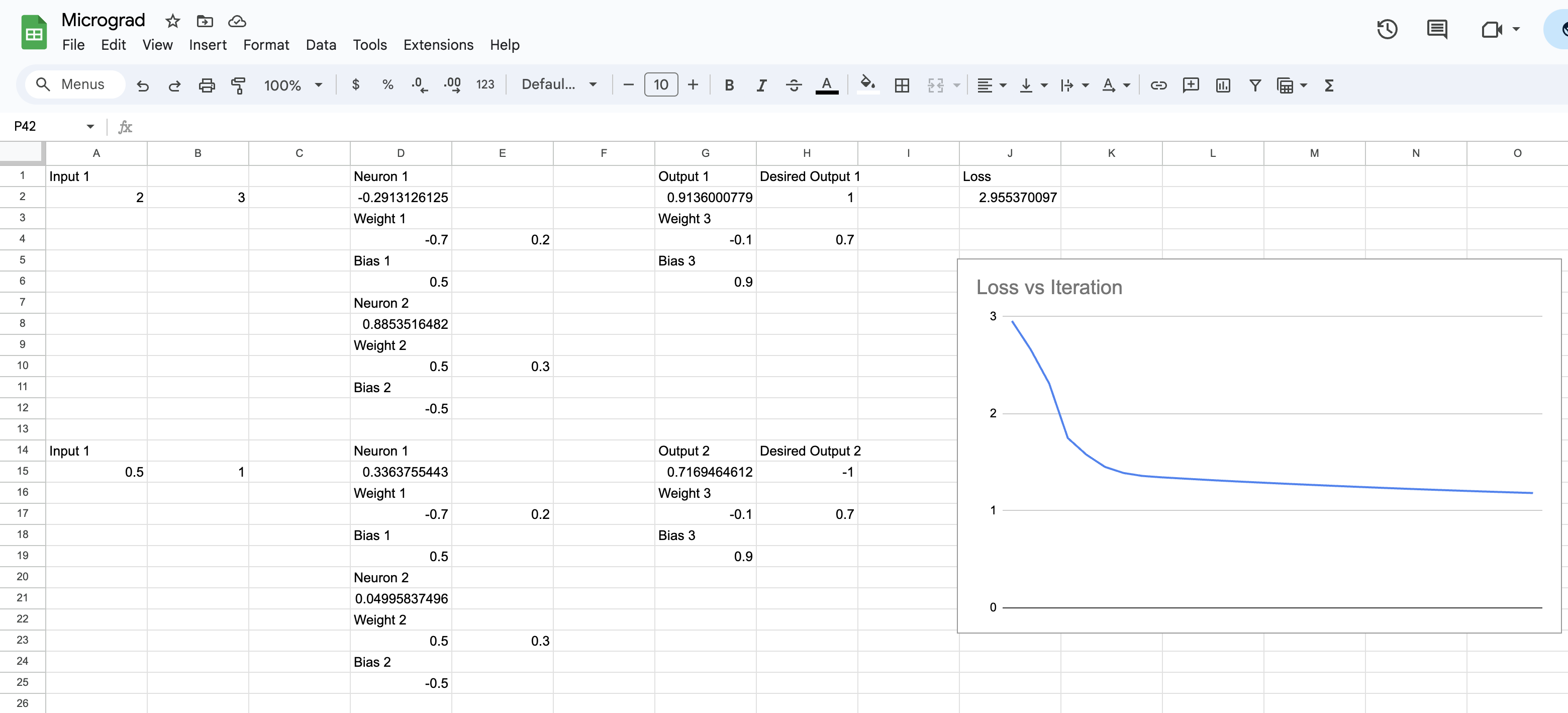

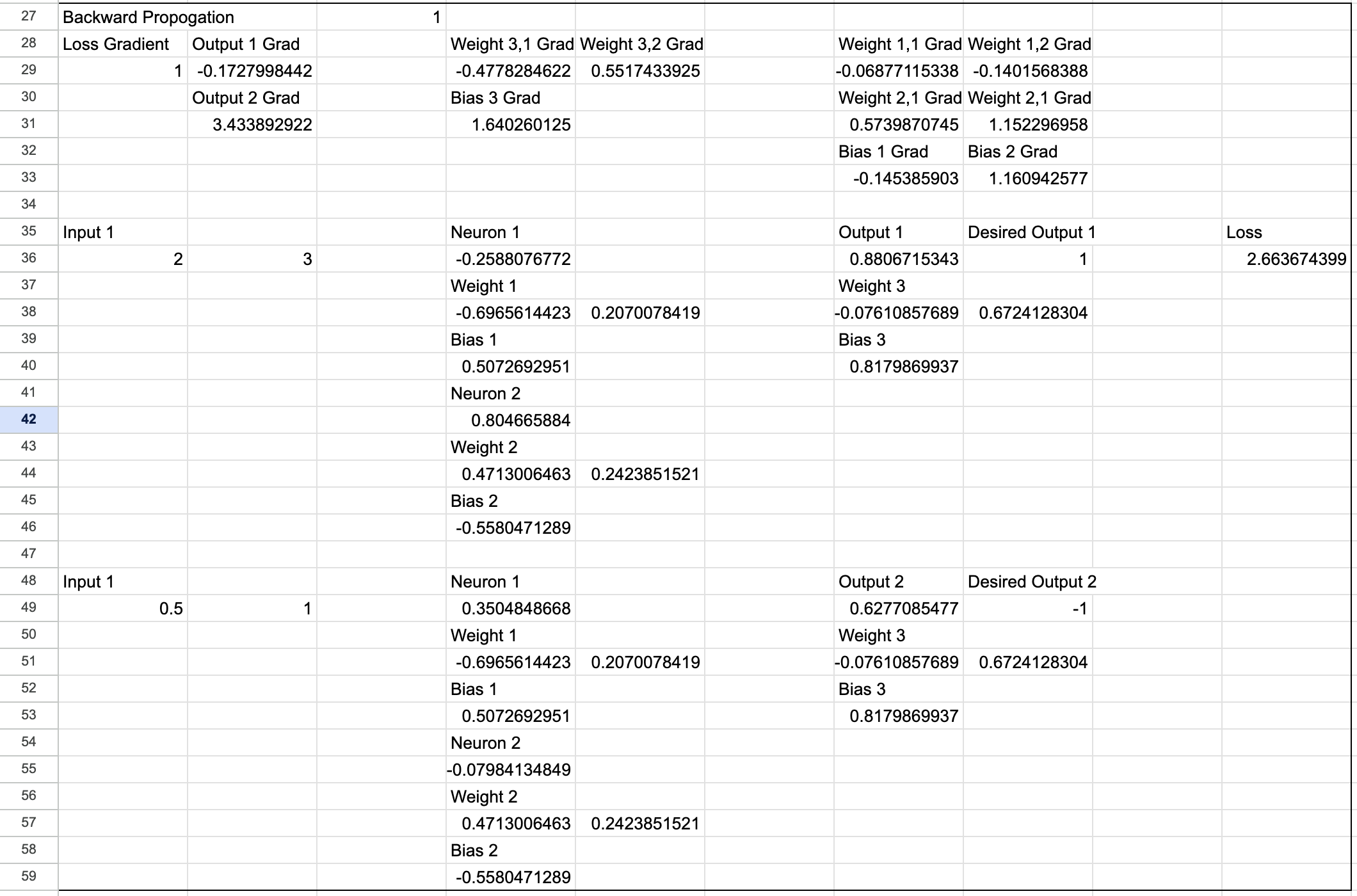

I first calculated the forward pass to obtain the output, then calculated the loss by comparing the output against the expected value. From the loss, I worked backwards to calculate the gradient of the loss with respect to each intermediate value, and ultimately with respect to each weight and bias. Once I had the gradients for all the weights and biases, I updated each parameter by subtracting the learning rate multiplied by its gradient, which nudges the loss downward. After several iterations, the loss decreased. Using PyTorch with the same number of iterations, I got a similar loss, so I assume that with enough iterations the loss can be lowered to near 0.
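
For readers who prefer code to spreadsheet cells, here is a minimal Python sketch of the same loop: forward pass, loss, backward pass, and parameter update. It is not a transcription of the sheet. The `tanh` activation on the two neurons, the linear output, the squared-error loss, the single training example, the learning rate, and all starting values are assumptions chosen for illustration, and the names (`forward`, `backward`, `step`, `train`, `params`) are hypothetical.

```python
import math


def forward(params, x):
    """Forward pass: two tanh neurons feeding one linear output (assumed layout)."""
    w, b, v, c = params["w"], params["b"], params["v"], params["c"]
    h = [math.tanh(w[i][0] * x[0] + w[i][1] * x[1] + b[i]) for i in range(2)]
    out = v[0] * h[0] + v[1] * h[1] + c
    return h, out


def backward(params, x, y, h, out):
    """Chain rule from the loss back to every weight and bias."""
    d_out = 2.0 * (out - y)  # dL/d(out) for the assumed loss L = (out - y)^2
    grads = {"w": [[0.0, 0.0], [0.0, 0.0]], "b": [0.0, 0.0],
             "v": [0.0, 0.0], "c": d_out}
    for i in range(2):
        grads["v"][i] = d_out * h[i]
        # Gradient at the neuron's pre-activation: d/dz tanh(z) = 1 - tanh(z)^2
        d_z = d_out * params["v"][i] * (1.0 - h[i] ** 2)
        grads["w"][i] = [d_z * x[0], d_z * x[1]]
        grads["b"][i] = d_z
    return grads


def step(params, grads, lr):
    """Gradient descent: subtract learning rate times gradient from each parameter."""
    for i in range(2):
        for j in range(2):
            params["w"][i][j] -= lr * grads["w"][i][j]
        params["b"][i] -= lr * grads["b"][i]
        params["v"][i] -= lr * grads["v"][i]
    params["c"] -= lr * grads["c"]


def train(params, x, y, lr=0.05, iterations=50):
    """Repeat forward, loss, backward, update; return the loss seen at each iteration."""
    losses = []
    for _ in range(iterations):
        h, out = forward(params, x)
        losses.append((out - y) ** 2)
        step(params, backward(params, x, y, h, out), lr)
    return losses


# Hypothetical starting weights, input, and target (not the values from the sheet).
params = {"w": [[0.5, -0.3], [0.1, 0.8]], "b": [0.0, 0.0],
          "v": [0.4, -0.6], "c": 0.0}
losses = train(params, x=[1.0, 2.0], y=1.0)
print("first loss:", losses[0], "last loss:", losses[-1])
```

Each function corresponds to one block of cells in a spreadsheet version: `forward` holds the values, `backward` holds the local derivatives multiplied along the chain, and `step` produces the next row of weights and biases.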